The Megalithic Portal is an incredible site for exploring ancient stone structures. I’m very into standing stones (menhirs) in particular. Something about their permanence has the hypnotic quality of fire to me. On any given weekend, May → September, odds are that I’m standing out on a moor staring intently at a many-thousand-year-old Rock.

Anyway, I digress. The Megalithic Portal is a fantastic resource for Rock Enjoyers, but their website has an extremely clunky raster map interface that only loads a subset of Rocks at once. So if you’re looking at a Rock in one part of the country but want to see a Rock in a different part of the country, you have to jump through Eleven Hoops to get there. I don’t want to jump through hoops, though. I just want to Look at Rocks.

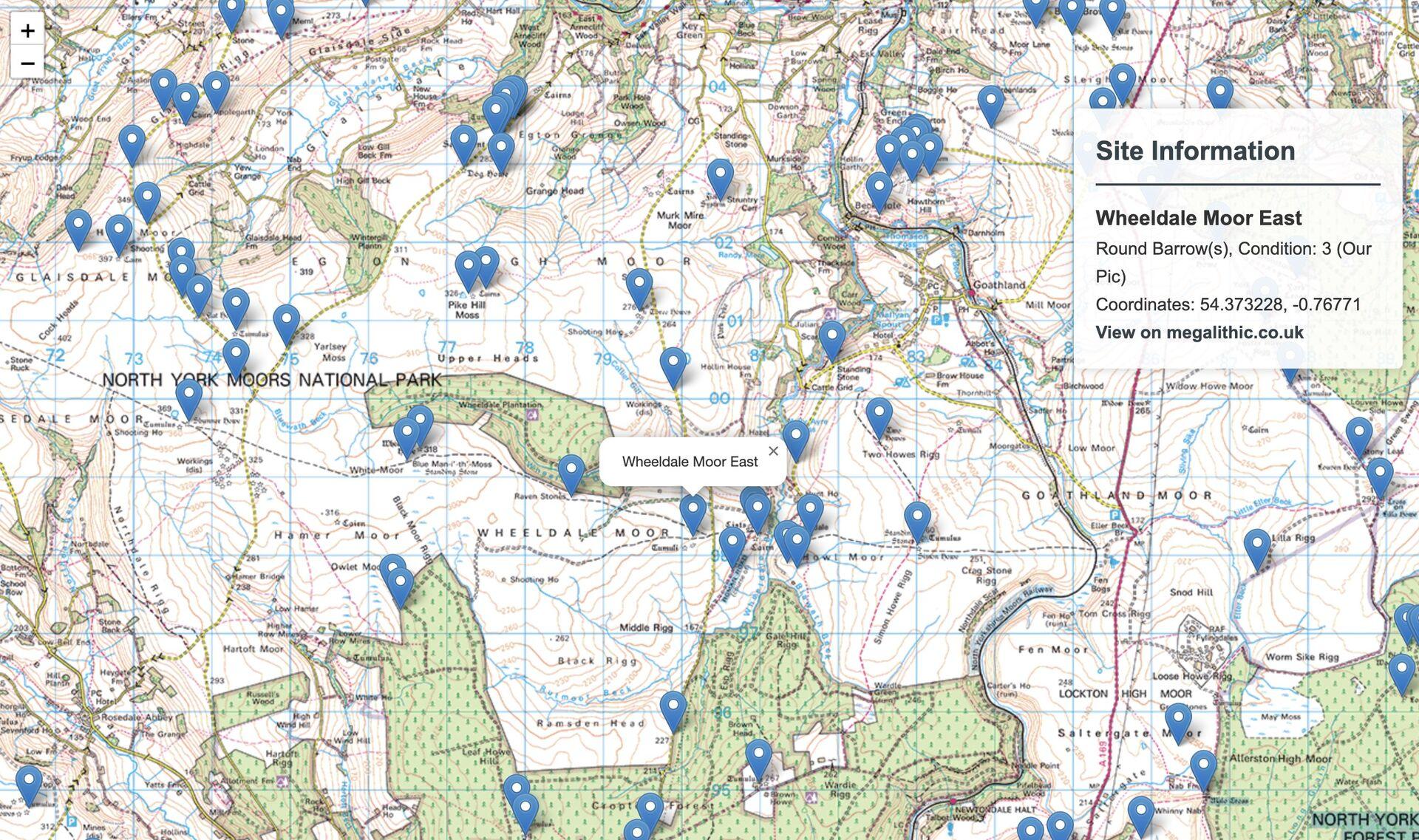

So I set out to make a map of my own.

The key discovery was that for each site on the Megalithic Portal, there’s a corresponding GeoRSS feed of nearby sites, with metadata for each nearby site including its name, latitude and longitude:

    <item>
      <title>Langleydale Common 8</title>
      <link>https://www.megalithic.co.uk/article.php?sid=50089</link>
      <description>Rock Art, (No Pic)</description>
      <geo:lat>54.614212686267</geo:lat>
      <geo:long>-1.9452625039514</geo:long>
      <media:thumbnail url="images/mapic/tg29.gif" height="20" width="20" />
    </item>

Each feed’s URL is parameterised by its site’s latitude and longitude, so from any given Rock’s feed I can iterate over the items and generate the feed URL for each of the related Rocks. That means I can hop from one feed to the next, saving each new item to an SQLite database as I go.
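For the curious, the feed-hopping can be sketched roughly like this. It's a minimal Python sketch under a few assumptions, not the code that actually got written: the `geo:` prefix is assumed to be bound to the W3C Basic Geo namespace, the `sites` table layout is made up for illustration, and `fetch_feed(lat, lon)` is a hypothetical function you'd supply yourself to build the feed URL for a location and download it (the real URL format isn't reproduced here).

```python
import sqlite3
import xml.etree.ElementTree as ET
from collections import deque

# W3C Basic Geo namespace; assumed to be what the feed binds "geo:" to.
GEO = "{http://www.w3.org/2003/01/geo/wgs84_pos#}"


def parse_items(feed_xml):
    """Yield (title, link, description, lat, lon) for each <item> in a feed."""
    root = ET.fromstring(feed_xml)
    for item in root.iter("item"):
        lat = item.findtext(GEO + "lat")
        lon = item.findtext(GEO + "long")
        if lat is None or lon is None:
            continue  # skip items with no coordinates
        yield (
            item.findtext("title", default=""),
            item.findtext("link", default=""),
            item.findtext("description", default=""),
            float(lat),
            float(lon),
        )


def crawl(start, fetch_feed, db, max_feeds=100):
    """Hop breadth-first from feed to feed, saving each new site once.

    start is a (lat, lon) pair. fetch_feed(lat, lon) is a hypothetical,
    caller-supplied function returning the feed XML for that location.
    Returns the number of feeds fetched.
    """
    # Hypothetical schema: the article link doubles as the unique key.
    db.execute(
        "CREATE TABLE IF NOT EXISTS sites ("
        "link TEXT PRIMARY KEY, title TEXT, description TEXT, lat REAL, lon REAL)"
    )
    queue = deque([start])
    fetched = 0
    while queue and fetched < max_feeds:
        lat, lon = queue.popleft()
        fetched += 1
        for title, link, desc, ilat, ilon in parse_items(fetch_feed(lat, lon)):
            cur = db.execute(
                "INSERT OR IGNORE INTO sites VALUES (?, ?, ?, ?, ?)",
                (link, title, desc, ilat, ilon),
            )
            if cur.rowcount == 1:  # newly seen site, so visit its feed too
                queue.append((ilat, ilon))
    db.commit()
    return fetched
```

The `max_feeds` cap is there so the crawl stops somewhere short of every Rock in the country, and `INSERT OR IGNORE` on the link keeps already-seen sites from being queued twice.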

A year ago I’d have said that sounded like a lot of work.

But we’re not living a year ago, so I got Claude 3.7 Sonnet (via Aider) to do it for me. It only took a little bit of prodding.

Okay, so now I have an SQLite database with a thousand or so barrows and tumuli and dikes and embankments and (yes) Rocks around where I live. But the whole point of this is to view all of the sites at once, without having to navigate the Megalithic Portal’s esoteric RSS-based raster maps and leap through their Eleven Hoops.

Back to Claude, to set up a simple HTTP server which reads all of the sites out of the database and plots them on a simple Leaflet map. It gets it in one. Boom roasted, I watch my own job evaporate like Thanos in front of me. What will my family think when they realise that I have been replaced by a robot named after a Beanie Baby?
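A server like that doesn't need to be much. Here's a rough sketch of the idea using only Python's standard library, with the caveat that it's my illustration rather than what Claude produced: the `sites.db` filename, the `sites` table with `title`, `link`, `lat` and `lon` columns, the `/sites.json` route and the starting map view are all assumptions, and the base layer here is plain OpenStreetMap tiles as a stand-in.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer

DB_PATH = "sites.db"  # hypothetical database filename

# One static page: Leaflet from a CDN, markers loaded from /sites.json.
PAGE = """<!DOCTYPE html>
<html><head><meta charset="utf-8"><title>Rocks</title>
<link rel="stylesheet" href="https://unpkg.com/leaflet@1.9.4/dist/leaflet.css">
<script src="https://unpkg.com/leaflet@1.9.4/dist/leaflet.js"></script>
<style>html, body, #map { height: 100%; margin: 0; }</style></head>
<body><div id="map"></div><script>
const map = L.map("map").setView([54.6, -1.9], 9);  // arbitrary starting view
L.tileLayer("https://tile.openstreetmap.org/{z}/{x}/{y}.png", {
  attribution: "&copy; OpenStreetMap contributors"
}).addTo(map);
fetch("/sites.json").then(r => r.json()).then(sites => {
  for (const s of sites) {
    const a = document.createElement("a");
    a.href = s.link;
    a.textContent = s.title;
    L.marker([s.lat, s.lon]).addTo(map).bindPopup(a);
  }
});
</script></body></html>"""


def load_sites(db):
    """Read every saved site as a list of JSON-ready dicts."""
    rows = db.execute("SELECT title, link, lat, lon FROM sites").fetchall()
    return [
        {"title": title, "link": link, "lat": lat, "lon": lon}
        for title, link, lat, lon in rows
    ]


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/":
            body, ctype = PAGE.encode("utf-8"), "text/html; charset=utf-8"
        elif self.path == "/sites.json":
            db = sqlite3.connect(DB_PATH)  # one short-lived connection per request
            try:
                body = json.dumps(load_sites(db)).encode("utf-8")
            finally:
                db.close()
            ctype = "application/json"
        else:
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", ctype)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Serve the map on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` and opening `http://127.0.0.1:8000/` should show every site in the database at once, which is the whole point. Sending all markers in a single JSON response is fine for a thousand or so sites; a much bigger database would probably want clustering or loading by bounding box.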

In an attempt to make myself feel even remotely relevant I go sign up for an OS Maps dev account so that I can use the Ordnance Survey maps as the base layer; this takes me like 20 minutes. I try not to think of how few milliseconds it would have taken Claude.

The whole thing costs £0.54.